In this post I'll build a simple program called TimeTimer with QML and try out a few other features along the way.


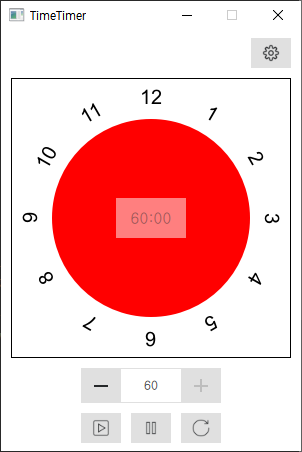

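TimeTimer is a clock that looks like the image above. I built one before in my Chrome extension series, and since it makes a good example, this time I'll build it with Qt/QML to get more familiar with them.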

Developing the Time Timer Chrome Extension - 4


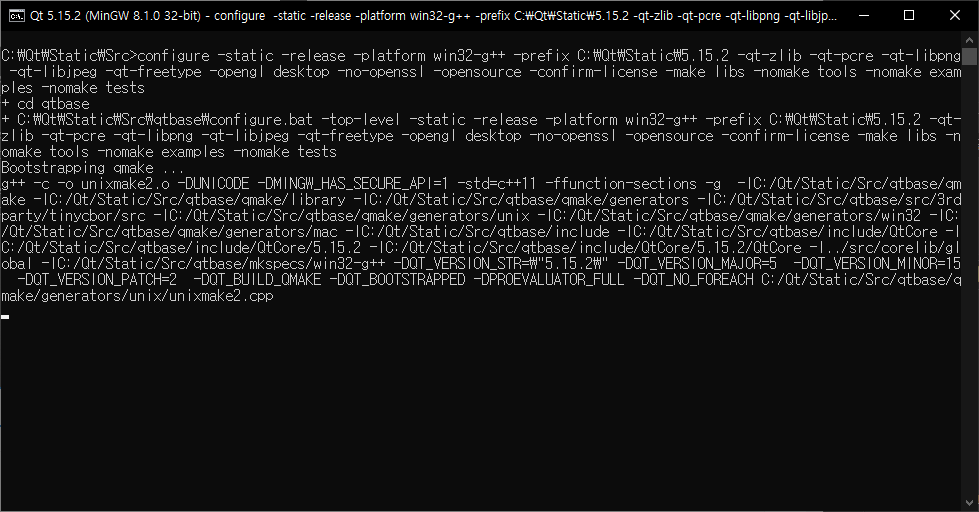

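Let me show the finished UI first. This is the main window. From top to bottom: a Button that opens the settings window, the clock UI, a SpinBox for setting the time, and the Start / Pause / Stop Buttons. The clock UI also contains a Label that shows the remaining time.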

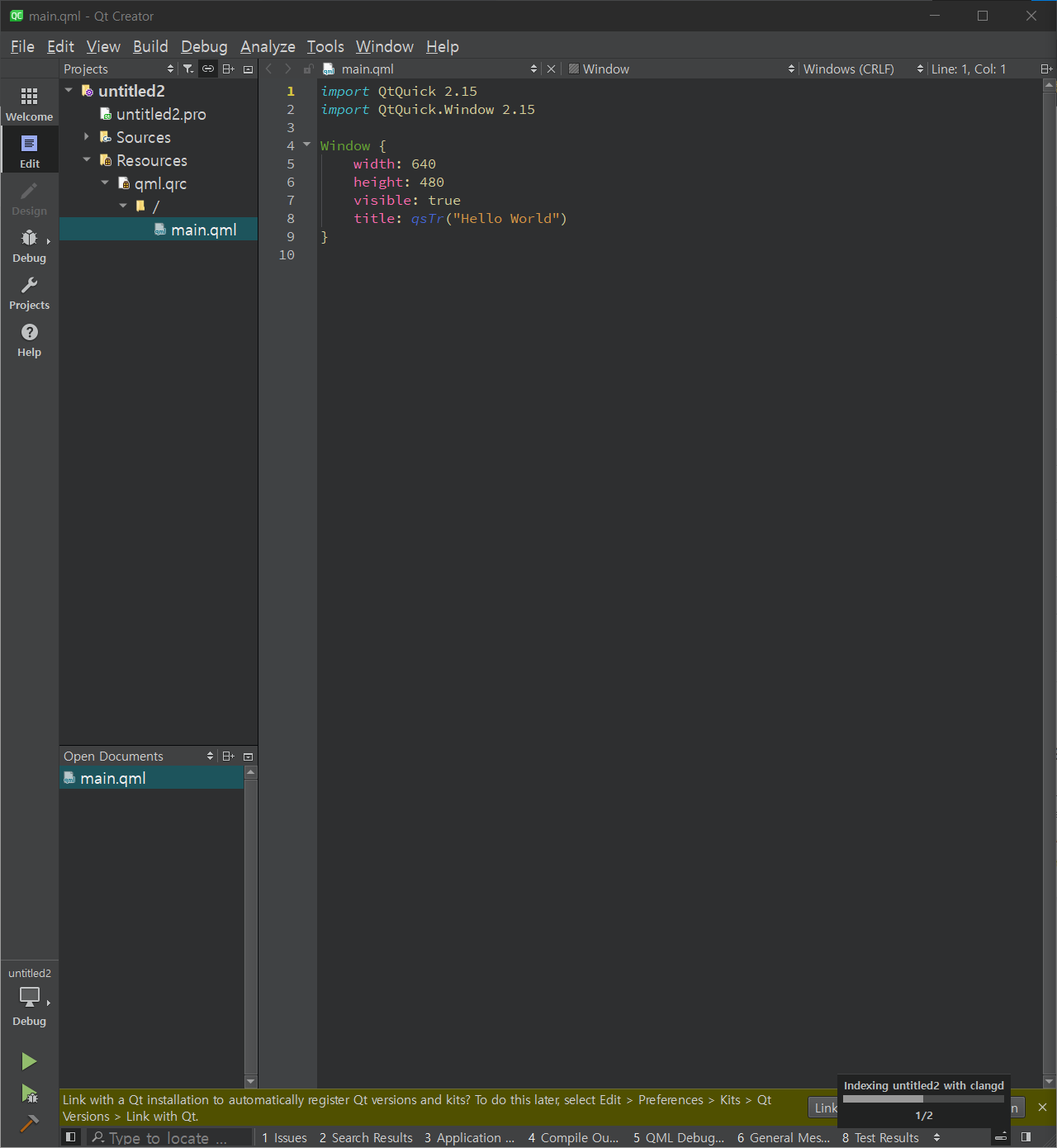

This is TimeTimer.qml, the file that defines the UI.

```qml
import QtQuick 2.15
import QtQuick.Window 2.15
import QtQuick.Controls 2.15
import QtQuick.Layouts 1.15

Window {
    id: windows

    property int windowWidth: 300
    property int windowHeight: 420
    property double dOpacity: 1
    property bool bAlwaysOnTop: false

    opacity: dOpacity
    width: windowWidth
    height: windowHeight
    minimumWidth: windowWidth
    maximumWidth: windowWidth
    minimumHeight: windowHeight
    maximumHeight: windowHeight
    visible: true

    // Emitted by the Start / Pause / Stop buttons
    signal emit_Start()
    signal emit_Pause()
    signal emit_Stop()

    Item {
        anchors.fill: parent

        ColumnLayout {
            spacing: 10
            anchors.centerIn: parent

            // Settings button: calls the Q_INVOKABLE TimeTimerMgr::showDialog()
            Button {
                Layout.preferredWidth: 40
                Layout.preferredHeight: 30
                Layout.alignment: Qt.AlignRight
                icon.name: "setting"
                icon.source: "qrc:/icon/setting.png"
                onClicked: {
                    timerMgr.showDialog()
                }
            }

            Rectangle {
                border.width: 1
                Layout.preferredWidth: 280
                Layout.preferredHeight: 280

                // White clock face
                Canvas {
                    id: backgroundCanvas
                    anchors.fill: parent
                    property int centerX: parent.width / 2
                    property int centerY: parent.height / 2
                    property int radius: parent.width / 2 - 39
                    property color backColor: "white"

                    onPaint: {
                        var ctx = backgroundCanvas.getContext("2d");
                        ctx.beginPath()
                        ctx.fillStyle = backColor
                        ctx.arc(centerX, centerY, radius, 0, 2 * Math.PI)
                        ctx.fill()
                        ctx.closePath()
                    }
                }

                // Red sector showing the remaining time
                Canvas {
                    id: timerCanvas
                    objectName: "timeCanvas"   // looked up from C++ with findChild
                    anchors.fill: parent
                    property int centerX: parent.width / 2
                    property int centerY: parent.height / 2
                    property int radius: parent.width / 2 - 41
                    property int setMinute: 60
                    property int setSecond: setMinute * 60
                    property color timeColor: "red"

                    onPaint: {
                        var time = setSecond;
                        // 12 o'clock is 270 degrees on the canvas; one minute is 6 degrees
                        var end = (45 - (time / 60)) * 6;
                        var ctx = timerCanvas.getContext("2d");
                        ctx.reset()
                        ctx.beginPath()
                        ctx.fillStyle = timeColor
                        ctx.moveTo(centerX, centerY);
                        ctx.arc(centerX, centerY, radius, (Math.PI / 180) * end, (Math.PI / 180) * 270);
                        ctx.fill()
                        ctx.closePath()
                    }

                    // Called from C++ through QMetaObject::invokeMethod
                    function rePaint() {
                        requestPaint()
                    }
                }

                // Numbers 1 to 12 around the face
                Canvas {
                    id: clockNumber
                    anchors.fill: parent
                    property int centerX: parent.width / 2
                    property int centerY: parent.height / 2

                    onPaint: {
                        var ctx = clockNumber.getContext("2d");
                        ctx.font = "20px sans-serif";
                        ctx.textAlign = "center";
                        ctx.textBaseline = "middle";
                        ctx.fillStyle = "black";
                        ctx.beginPath()
                        ctx.translate(centerX, centerY);
                        for (var number = 1; number <= 12; number++) {
                            // Rotate a further 30 degrees before drawing each number
                            var angle = (Math.PI / 180) * 30;
                            ctx.rotate(angle);
                            ctx.fillText(number, 0, -120);
                        }
                        ctx.closePath()
                    }
                }

                // Remaining time as MM:SS in the middle of the clock
                Rectangle {
                    anchors.centerIn: parent
                    opacity: 0.5
                    width: 70
                    height: 40

                    Label {
                        id: remainTime
                        anchors.fill: parent
                        horizontalAlignment: Text.AlignHCenter
                        verticalAlignment: Text.AlignVCenter
                        font.pixelSize: 15
                        text: (parseInt(timerCanvas.setSecond / 60)).toString().padStart(2, '0')
                              + ":" + (parseInt(timerCanvas.setSecond % 60)).toString().padStart(2, '0')
                    }
                }
            }

            SpinBox {
                id: spinBox
                Layout.alignment: Qt.AlignHCenter
                Layout.preferredHeight: 35
                from: 0
                value: 60
                to: 60
                stepSize: 1
                editable: true

                // Redraw the clock whenever the minutes change
                onValueChanged: {
                    timerCanvas.setMinute = value
                    timerCanvas.requestPaint()
                }

                // Main Enter (Key_Return) or keypad Enter (Key_Enter) releases focus
                Keys.onPressed: {
                    if (event.key === Qt.Key_Enter || event.key === Qt.Key_Return) {
                        focus = false
                    }
                }
            }

            RowLayout {
                spacing: 10
                Layout.alignment: Qt.AlignHCenter

                Button {
                    Layout.preferredWidth: 40
                    Layout.preferredHeight: 30
                    icon.name: "start"
                    icon.source: "qrc:/icon/start.png"
                    onClicked: {
                        windows.emit_Start()
                    }
                }

                Button {
                    Layout.preferredWidth: 40
                    Layout.preferredHeight: 30
                    icon.name: "pause"
                    icon.source: "qrc:/icon/pause.png"
                    onClicked: {
                        windows.emit_Pause()
                    }
                }

                Button {
                    Layout.preferredWidth: 40
                    Layout.preferredHeight: 30
                    icon.name: "reset"
                    icon.source: "qrc:/icon/reset.png"
                    onClicked: {
                        windows.emit_Stop()
                    }
                }
            }
        }
    }
}
```

Let's take the UI apart in more detail. Signals are declared so that the Start / Pause / Stop Buttons can emit them when clicked.

```qml
Window {
    id: windows

    property int windowWidth: 300
    property int windowHeight: 420
    property double dOpacity: 1
    property bool bAlwaysOnTop: false

    opacity: dOpacity
    width: windowWidth
    height: windowHeight
    minimumWidth: windowWidth
    maximumWidth: windowWidth
    minimumHeight: windowHeight
    maximumHeight: windowHeight
    visible: true

    // Emitted by the Start / Pause / Stop buttons
    signal emit_Start()
    signal emit_Pause()
    signal emit_Stop()

    // ...
}
```

The UI elements are laid out with ColumnLayout and RowLayout; using these layouts requires importing QtQuick.Layouts. When clicked, the button at the very top calls a function registered with Q_INVOKABLE.

```qml
Item {
    anchors.fill: parent

    ColumnLayout {
        spacing: 10
        anchors.centerIn: parent

        Button {
            Layout.preferredWidth: 40
            Layout.preferredHeight: 30
            Layout.alignment: Qt.AlignRight
            icon.name: "setting"
            icon.source: "qrc:/icon/setting.png"
            onClicked: {
                // timerMgr is the TimeTimerMgr registered in the root context
                timerMgr.showDialog()
            }
        }

        // ...
    }
}
```

Next is the main part, the clock. It is drawn with Canvas, and because QML uses JavaScript, JavaScript functions are available, which let me reuse the code from the earlier example as-is.

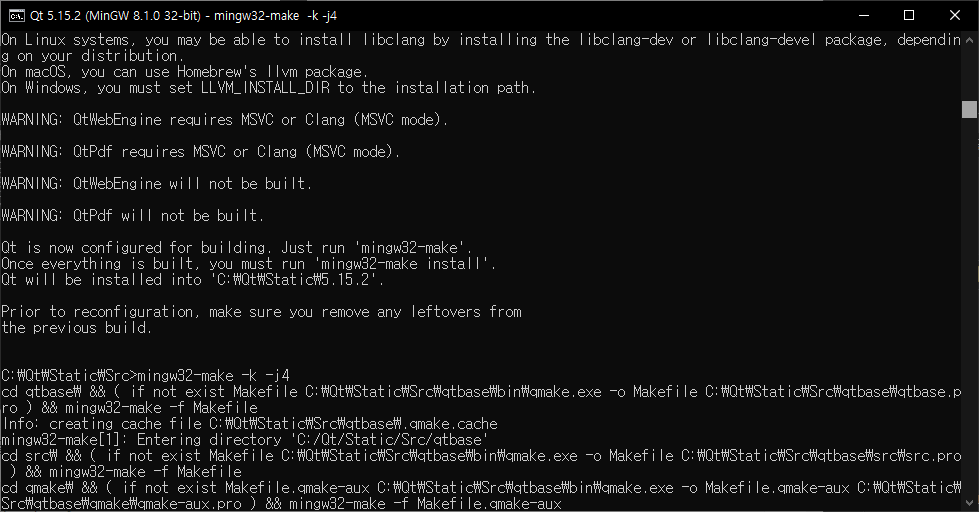

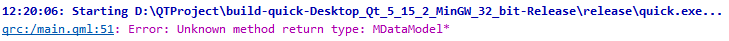
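The angle arithmetic in timerCanvas's onPaint handler can be checked outside QML. Below is a minimal C++ sketch of the same math; the function names are hypothetical and not part of the project. The canvas measures angles clockwise from 3 o'clock because its y axis points down, so 12 o'clock is 270°, and one minute is 360° / 60 = 6°. The red sector therefore starts at (45 - minutes) × 6 degrees and ends at 270°. The last function shows where clockNumber's rotate-then-fillText loop places each number.

```cpp
#include <cassert>
#include <cmath>

constexpr double kPi = 3.14159265358979323846;
constexpr double kTwelveOClockDeg = 270.0;  // canvas angle of 12 o'clock
constexpr double kDegPerMinute = 6.0;       // 360 degrees / 60 minutes

// Start angle of the red sector, same formula as timerCanvas:
// end = (45 - minutes) * 6
double sectorStartDeg(double minutes) {
    assert(minutes >= 0.0 && minutes <= 60.0);
    return (45.0 - minutes) * kDegPerMinute;
}

// Clockwise sweep from the start angle up to 12 o'clock (6 degrees per minute)
double sectorSweepDeg(double minutes) {
    return kTwelveOClockDeg - sectorStartDeg(minutes);
}

double degToRad(double deg) { return deg * kPi / 180.0; }

struct Point { double x; double y; };

// Position of clock number n (1..12) relative to the center: equivalent to
// rotating the context by 30 degrees n times and drawing at (0, -r).
Point numberPosition(int n, double r) {
    assert(n >= 1 && n <= 12);
    const double theta = degToRad(30.0 * n);
    return { r * std::sin(theta), -r * std::cos(theta) };
}
```

With 60 minutes the sector starts at -90° (the same direction as 270°) and sweeps a full 360°; with 0 minutes the start and end angles coincide and nothing is filled.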

timerCanvas, which draws the remaining time, also has one function, rePaint. Calling it calls requestPaint, which schedules a repaint and runs the onPaint handler. The C++ side calls this QML function directly through QMetaObject::invokeMethod.

```qml
Rectangle {
    border.width: 1
    Layout.preferredWidth: 280
    Layout.preferredHeight: 280

    Canvas {
        id: backgroundCanvas
        anchors.fill: parent
        property int centerX: parent.width / 2
        property int centerY: parent.height / 2
        property int radius: parent.width / 2 - 39
        property color backColor: "white"

        onPaint: {
            var ctx = backgroundCanvas.getContext("2d");
            ctx.beginPath()
            ctx.fillStyle = backColor
            ctx.arc(centerX, centerY, radius, 0, 2 * Math.PI)
            ctx.fill()
            ctx.closePath()
        }
    }

    Canvas {
        id: timerCanvas
        objectName: "timeCanvas"
        anchors.fill: parent
        property int centerX: parent.width / 2
        property int centerY: parent.height / 2
        property int radius: parent.width / 2 - 41
        property int setMinute: 60
        property int setSecond: setMinute * 60
        property color timeColor: "red"

        onPaint: {
            var time = setSecond;
            var end = (45 - (time / 60)) * 6;
            var ctx = timerCanvas.getContext("2d");
            ctx.reset()
            ctx.beginPath()
            ctx.fillStyle = timeColor
            ctx.moveTo(centerX, centerY);
            ctx.arc(centerX, centerY, radius, (Math.PI / 180) * end, (Math.PI / 180) * 270);
            ctx.fill()
            ctx.closePath()
        }

        // Called from C++ through QMetaObject::invokeMethod
        function rePaint() {
            requestPaint()
        }
    }

    Canvas {
        id: clockNumber
        anchors.fill: parent
        property int centerX: parent.width / 2
        property int centerY: parent.height / 2

        onPaint: {
            var ctx = clockNumber.getContext("2d");
            ctx.font = "20px sans-serif";
            ctx.textAlign = "center";
            ctx.textBaseline = "middle";
            ctx.fillStyle = "black";
            ctx.beginPath()
            ctx.translate(centerX, centerY);
            for (var number = 1; number <= 12; number++) {
                var angle = (Math.PI / 180) * 30;
                ctx.rotate(angle);
                ctx.fillText(number, 0, -120);
            }
            ctx.closePath()
        }
    }

    // ...
}
```

Next is the part that displays the remaining time. I'm covering it separately because it uses padStart, which pads the minutes and seconds to two digits even when they are single digits.
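For reference, here is the same MM:SS formatting written in C++. It is only a sketch: padStart and formatRemaining are illustrative names, not project code, and the integer division plays the role of parseInt(setSecond / 60) in the Label binding.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Left-pads s with fill until it is at least width characters long,
// like JavaScript's String.prototype.padStart(width, fill).
std::string padStart(std::string s, std::size_t width, char fill) {
    if (s.size() < width) {
        s.insert(0, width - s.size(), fill);
    }
    return s;
}

// Formats a non-negative number of seconds as "MM:SS".
std::string formatRemaining(int totalSeconds) {
    assert(totalSeconds >= 0);
    const int minutes = totalSeconds / 60;  // truncates like parseInt
    const int seconds = totalSeconds % 60;
    return padStart(std::to_string(minutes), 2, '0') + ":" +
           padStart(std::to_string(seconds), 2, '0');
}
```

As with padStart in JavaScript, a string that is already long enough is returned unchanged, so 60 minutes still shows as "60:00".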

```qml
Rectangle {
    anchors.centerIn: parent
    opacity: 0.5
    width: 70
    height: 40

    Label {
        id: remainTime
        anchors.fill: parent
        horizontalAlignment: Text.AlignHCenter
        verticalAlignment: Text.AlignVCenter
        font.pixelSize: 15
        // Minutes and seconds, each padded to two digits with padStart
        text: (parseInt(timerCanvas.setSecond / 60)).toString().padStart(2, '0')
              + ":" + (parseInt(timerCanvas.setSecond % 60)).toString().padStart(2, '0')
    }
}
```

Finally, the SpinBox and the Buttons. The SpinBox sets the time, and every time its value changes it calls requestPaint on timerCanvas to redraw the clock.

The SpinBox has its editable property set to true so the value can be typed on the keyboard, and pressing either the main Enter key (Qt.Key_Return) or the keypad Enter key (Qt.Key_Enter) releases its focus.

The Buttons emit signals, and TimeTimerMgr connects these signals to its slot functions.

```qml
SpinBox {
    id: spinBox
    Layout.alignment: Qt.AlignHCenter
    Layout.preferredHeight: 35
    from: 0
    value: 60
    to: 60
    stepSize: 1
    editable: true

    onValueChanged: {
        timerCanvas.setMinute = value
        timerCanvas.requestPaint()
    }

    Keys.onPressed: {
        if (event.key === Qt.Key_Enter || event.key === Qt.Key_Return) {
            focus = false
        }
    }
}

RowLayout {
    spacing: 10
    Layout.alignment: Qt.AlignHCenter

    Button {
        Layout.preferredWidth: 40
        Layout.preferredHeight: 30
        icon.name: "start"
        icon.source: "qrc:/icon/start.png"
        onClicked: {
            windows.emit_Start()
        }
    }

    Button {
        Layout.preferredWidth: 40
        Layout.preferredHeight: 30
        icon.name: "pause"
        icon.source: "qrc:/icon/pause.png"
        onClicked: {
            windows.emit_Pause()
        }
    }

    Button {
        Layout.preferredWidth: 40
        Layout.preferredHeight: 30
        icon.name: "reset"
        icon.source: "qrc:/icon/reset.png"
        onClicked: {
            windows.emit_Stop()
        }
    }
}
```

Next is the main function. It passes the QQmlApplicationEngine to the manager object, TimeTimerMgr.

#include <QGuiApplication>

#include <QQmlApplicationEngine>

#include <QQmlContext>

#include "TimeTimerMgr.h"

int main (int argc, char *argv[])

#if QT_VERSION < QT_VERSION_CHECK(6, 0, 0)

QCoreApplication::setAttribute (Qt::AA_EnableHighDpiScaling);

#endif

QGuiApplication app (argc, argv) ;

QQmlApplicationEngine engine;

const QUrl url (QStringLiteral("qrc:/TimeTimer.qml" )) connect (&engine, &QQmlApplicationEngine::objectCreated, &app,

[url](QObject *obj, const QUrl &objUrl) {

if (!obj && url == objUrl)

QCoreApplication::exit (-1 );

}

, Qt::QueuedConnection);

engine.load (url);

TimeTimerMgr timerMgr;

timerMgr.SetEngine (&engine);

return app.exec ();

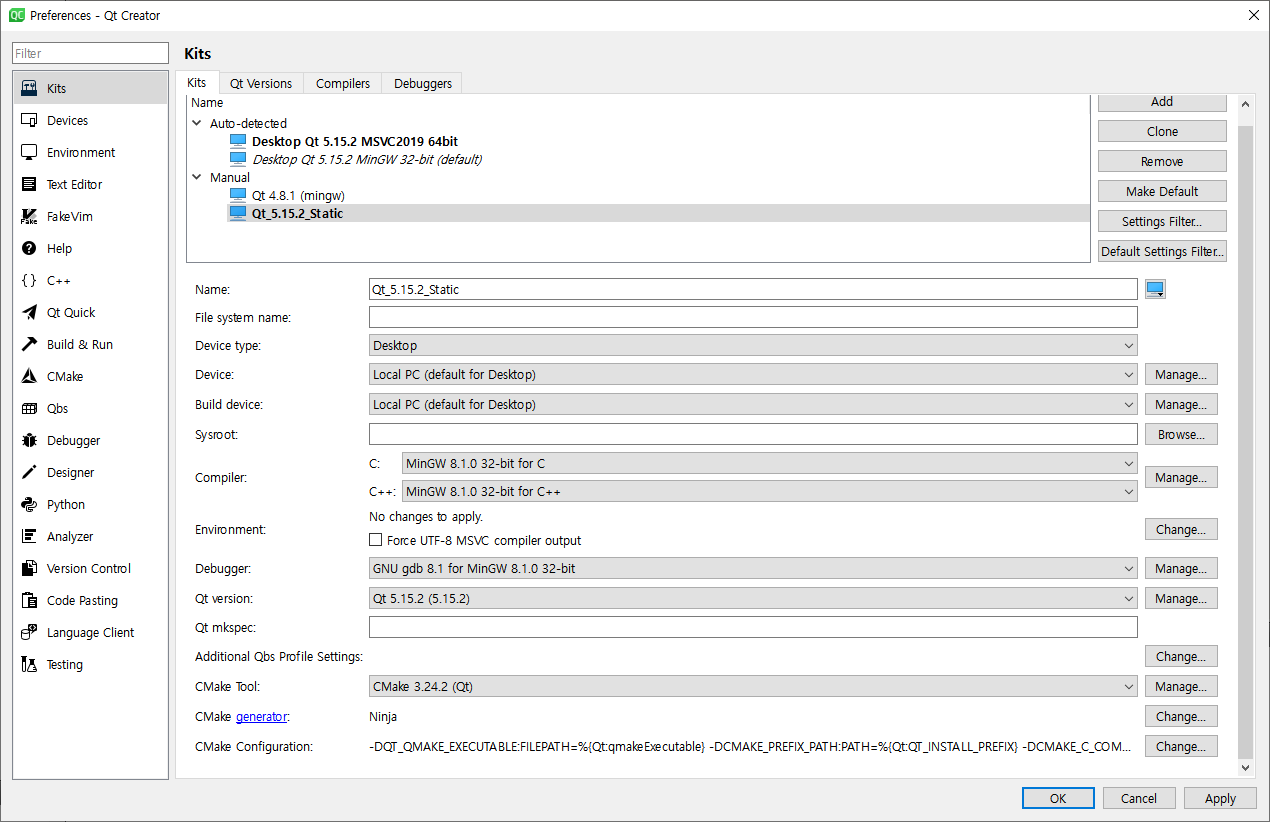

}다음으로 TimeTimerMgr 헤더 파일입니다. 여기에 설정 버튼을 클릭했을 때 호출할 Q_INVOKABLE 함수가 선언되어 있고 각 오브젝트들을 받을 수 있게 QObject 멤버와 QQuickWindows도 가지고 있습니다.

```cpp
#ifndef TIMETIMERMGR_H
#define TIMETIMERMGR_H

#include <QObject>
#include <QTimer>
#include <QQmlApplicationEngine>
#include <QQmlContext>
#include <QQuickWindow>
#include "ConfigureDialog.h"

class TimeTimerMgr : public QObject
{
    Q_OBJECT
public:
    explicit TimeTimerMgr(QObject *parent = nullptr);
    ~TimeTimerMgr();

    void SetEngine(QQmlApplicationEngine *engine);
    void InitIteragte();

    // Callable from QML as timerMgr.showDialog()
    Q_INVOKABLE void showDialog();

public slots:
    void Slot_Start();
    void Slot_Pause();
    void Slot_Stop();
    void Slot_Timeout();
    void Slot_GetColor(QColor color);
    void Slot_GetOpacity(double dOpacity);
    void Slot_GetAlwaysOnTop(bool bChecked);
    void Slot_DialogClose();

private:
    ConfigureDialog* m_pDialog;
    QQmlApplicationEngine* m_engine;
    QQuickWindow* m_pWindow;
    QObject* m_pTimeCanvas;      // timerCanvas (objectName "timeCanvas")
    QTimer timer;
    int m_nTime;                 // timer length in minutes
    int m_nCount;                // elapsed seconds
};

#endif // TIMETIMERMGR_H
```

Next is the TimeTimerMgr cpp code.

```cpp
#include "TimeTimerMgr.h"
#include <QQmlProperty>

TimeTimerMgr::TimeTimerMgr(QObject *parent)
    : QObject{parent}
    , m_pDialog(NULL)
    , m_engine(NULL)
    , m_pWindow(NULL)
    , m_pTimeCanvas(NULL)
    , m_nTime(0)
    , m_nCount(0)
{
    // Slot_Timeout runs once per second while the timer is active
    connect(&timer, SIGNAL(timeout()), this, SLOT(Slot_Timeout()), Qt::UniqueConnection);
}

void TimeTimerMgr::showDialog()
{
    // Only one settings dialog at a time
    if ( m_pDialog != NULL ) { return; }

    m_pDialog = new ConfigureDialog(this, m_engine);
    connect(m_pDialog, SIGNAL(Emit_setColor(QColor)), this, SLOT(Slot_GetColor(QColor)), Qt::UniqueConnection);
    connect(m_pDialog, SIGNAL(Emit_setOpacity(double)), this, SLOT(Slot_GetOpacity(double)), Qt::UniqueConnection);
    connect(m_pDialog, SIGNAL(Emit_setAlwaysOnTop(bool)), this, SLOT(Slot_GetAlwaysOnTop(bool)), Qt::UniqueConnection);
    connect(m_pDialog, SIGNAL(Emit_Close()), this, SLOT(Slot_DialogClose()), Qt::UniqueConnection);

    // Hand the current QML property values to the dialog
    TimerSetting settingValue;
    settingValue.color = m_pTimeCanvas->property("timeColor").value<QColor>();
    settingValue.opacity = m_pWindow->property("dOpacity").toDouble();
    settingValue.bAlwaysOnTop = m_pWindow->property("bAlwaysOnTop").toBool();

    m_pDialog->Show();
    m_pDialog->SetSettingValue(settingValue);
}

void TimeTimerMgr::Slot_Start()
{
    // Read the minutes set with the SpinBox and tick once per second
    m_nTime = m_pTimeCanvas->property("setMinute").toInt();
    timer.start(1000);
}

void TimeTimerMgr::Slot_Pause()
{
    // m_nCount is kept, so Start continues from here
    timer.stop();
}

void TimeTimerMgr::Slot_Stop()
{
    timer.stop();
    m_pTimeCanvas->setProperty("setSecond", (m_nTime*60));
    QMetaObject::invokeMethod(m_pTimeCanvas, "rePaint");
    m_nCount = 0;
}

void TimeTimerMgr::Slot_Timeout()
{
    m_nCount++;
    m_pTimeCanvas->setProperty("setSecond", (m_nTime*60 - m_nCount));
    QMetaObject::invokeMethod(m_pTimeCanvas, "rePaint");

    if ( m_nCount >= m_nTime*60 ) {
        timer.stop();
    }
}

void TimeTimerMgr::Slot_GetColor(QColor color)
{
    m_pTimeCanvas->setProperty("timeColor", color);
    QMetaObject::invokeMethod(m_pTimeCanvas, "rePaint");
}

void TimeTimerMgr::Slot_GetOpacity(double dOpacity)
{
    m_pWindow->setProperty("dOpacity", dOpacity);
}

void TimeTimerMgr::Slot_GetAlwaysOnTop(bool bChecked)
{
    m_pWindow->setProperty("bAlwaysOnTop", bChecked);
    m_pWindow->setFlag(Qt::WindowStaysOnTopHint, bChecked);
}

void TimeTimerMgr::Slot_DialogClose()
{
    m_pDialog = NULL;
}

TimeTimerMgr::~TimeTimerMgr()
{
    if ( m_pDialog != NULL ) {
        delete m_pDialog;
        m_pDialog = NULL;
    }
}

void TimeTimerMgr::SetEngine(QQmlApplicationEngine* engine)
{
    m_engine = engine;
    InitIteragte();
}

void TimeTimerMgr::InitIteragte()
{
    if ( m_engine == NULL ) { return; }

    // Expose this object to QML as "timerMgr"
    m_engine->rootContext()->setContextProperty("timerMgr", this);

    QList<QObject*> objectList = m_engine->rootObjects();
    if ( objectList.count() < 1 ) { return; }

    m_pWindow = qobject_cast<QQuickWindow*>(objectList.value(0));
    if ( m_pWindow == NULL ) { return; }

    // Button signals declared in TimeTimer.qml
    connect(m_pWindow, SIGNAL(emit_Start()), this, SLOT(Slot_Start()), Qt::UniqueConnection);
    connect(m_pWindow, SIGNAL(emit_Pause()), this, SLOT(Slot_Pause()), Qt::UniqueConnection);
    connect(m_pWindow, SIGNAL(emit_Stop()), this, SLOT(Slot_Stop()), Qt::UniqueConnection);

    m_pTimeCanvas = m_pWindow->findChild<QObject*>("timeCanvas");
}
```

Let's look at this file piece by piece too. main passes the engine in separately, and the TimeTimerMgr object is registered in the engine's rootContext as timerMgr. This is what lets TimeTimer.qml call TimeTimerMgr's Q_INVOKABLE function. The SIGNALs emitted when the buttons are clicked are then connected to the SLOTs.

```cpp
void TimeTimerMgr::SetEngine(QQmlApplicationEngine* engine)
{
    m_engine = engine;
    InitIteragte();
}

void TimeTimerMgr::InitIteragte()
{
    if ( m_engine == NULL ) { return; }

    m_engine->rootContext()->setContextProperty("timerMgr", this);

    QList<QObject*> objectList = m_engine->rootObjects();
    if ( objectList.count() < 1 ) { return; }

    m_pWindow = qobject_cast<QQuickWindow*>(objectList.value(0));
    if ( m_pWindow == NULL ) { return; }

    connect(m_pWindow, SIGNAL(emit_Start()), this, SLOT(Slot_Start()), Qt::UniqueConnection);
    connect(m_pWindow, SIGNAL(emit_Pause()), this, SLOT(Slot_Pause()), Qt::UniqueConnection);
    connect(m_pWindow, SIGNAL(emit_Stop()), this, SLOT(Slot_Stop()), Qt::UniqueConnection);

    m_pTimeCanvas = m_pWindow->findChild<QObject*>("timeCanvas");
}
```

Next are the slot functions. Through them, the manager reads and writes the properties of timeCanvas and the window. The AlwaysOnTop checked state is also passed on as a window flag.
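The counting done by these slots does not depend on Qt, so it can be modeled and tested on its own. The sketch below is a hypothetical CountdownState class, not part of the project, that mirrors m_nTime and m_nCount; the QTimer is replaced by calling tick() once per simulated second. One assumption differs from the post: start() does nothing when no time is left, and tick() stops at zero using >=, so a finished timer cannot count into negative seconds.

```cpp
#include <cassert>

// Qt-free model of Slot_Start / Slot_Pause / Slot_Stop / Slot_Timeout.
class CountdownState {
public:
    // Like Slot_Start: read the minutes and run; m_nCount is kept,
    // so start() after pause() resumes where it left off.
    void start(int minutes) {
        assert(minutes >= 0);
        m_nTime = minutes;
        m_running = remaining() > 0;
    }

    // Like Slot_Pause: stop ticking without resetting the count.
    void pause() { m_running = false; }

    // Like Slot_Stop: stop and rewind to the full time.
    void stop() {
        m_running = false;
        m_nCount = 0;
    }

    // Like Slot_Timeout: one second has passed.
    void tick() {
        if (!m_running) return;
        ++m_nCount;
        if (m_nCount >= m_nTime * 60) m_running = false;
    }

    // The value written to the QML setSecond property
    int remaining() const { return m_nTime * 60 - m_nCount; }
    bool running() const { return m_running; }

private:
    int m_nTime = 0;   // minutes
    int m_nCount = 0;  // elapsed seconds
    bool m_running = false;
};
```

For example, start(1), thirty ticks, pause(), then start(1) again resumes from 30 seconds remaining, which matches how Slot_Pause keeps m_nCount while Slot_Stop resets it.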

```cpp
void TimeTimerMgr::Slot_Start()
{
    m_nTime = m_pTimeCanvas->property("setMinute").toInt();
    timer.start(1000);
}

void TimeTimerMgr::Slot_Pause()
{
    timer.stop();
}

void TimeTimerMgr::Slot_Stop()
{
    timer.stop();
    m_pTimeCanvas->setProperty("setSecond", (m_nTime*60));
    QMetaObject::invokeMethod(m_pTimeCanvas, "rePaint");
    m_nCount = 0;
}

void TimeTimerMgr::Slot_Timeout()
{
    // One second has passed: update the remaining seconds and redraw
    m_nCount++;
    m_pTimeCanvas->setProperty("setSecond", (m_nTime*60 - m_nCount));
    QMetaObject::invokeMethod(m_pTimeCanvas, "rePaint");

    if ( m_nCount >= m_nTime*60 ) {
        timer.stop();
    }
}

void TimeTimerMgr::Slot_GetColor(QColor color)
{
    m_pTimeCanvas->setProperty("timeColor", color);
    QMetaObject::invokeMethod(m_pTimeCanvas, "rePaint");
}

void TimeTimerMgr::Slot_GetOpacity(double dOpacity)
{
    m_pWindow->setProperty("dOpacity", dOpacity);
}

void TimeTimerMgr::Slot_GetAlwaysOnTop(bool bChecked)
{
    m_pWindow->setProperty("bAlwaysOnTop", bChecked);
    // Keep the window above other windows while checked
    m_pWindow->setFlag(Qt::WindowStaysOnTopHint, bChecked);
}
```

Finally, the function that opens the dialog when the settings Button is clicked. It reads the current property values and passes them to the dialog.

void TimeTimerMgr::showDialog ()

new ConfigureDialog (this , m_engine);

connect (pDialog, SIGNAL (Emit_setColor (QColor)), this , SLOT (Slot_GetColor (QColor)), Qt::UniqueConnection);

connect (pDialog, SIGNAL (Emit_setOpacity (double )), this , SLOT (Slot_GetOpacity (double )), Qt::UniqueConnection);

connect (pDialog, SIGNAL (Emit_setAlwaysOnTop (bool )), this , SLOT (Slot_GetAlwaysOnTop (bool )), Qt::UniqueConnection);

TimerSetting settingValue;

settingValue.color = m_pTimeCanvas->property ("timeColor" ).value<QColor>();

settingValue.opacity = m_pWindow->property ("dOpacity" ).toDouble ();

settingValue.bAlwaysOnTop = m_pWindow->property ("bAlwaysOnTop" ).toBool ();

pDialog->Show ();

pDialog->SetSettingValue (settingValue);

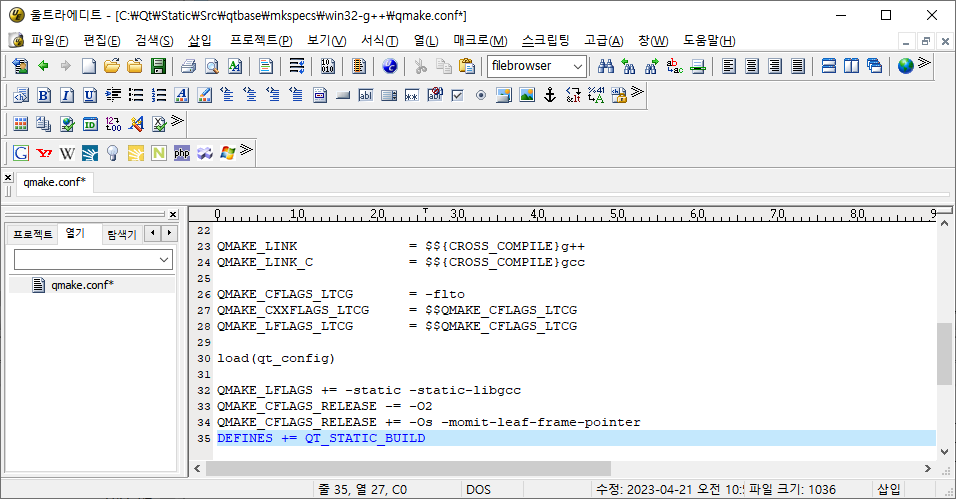

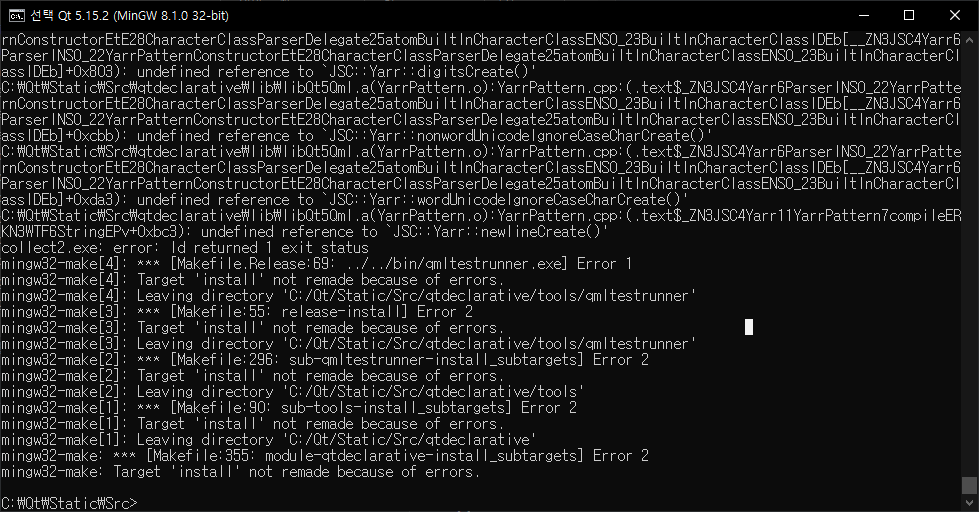

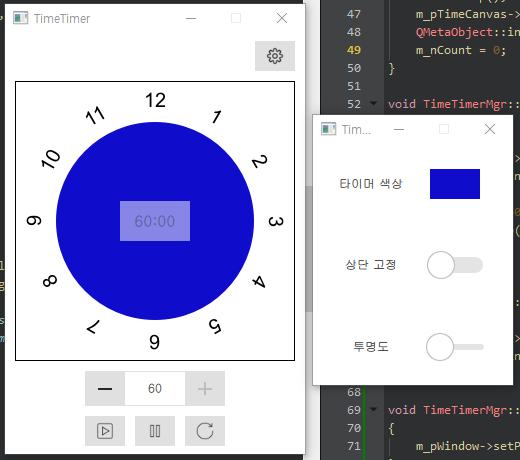

}다음은 Dialog UI입니다. 버튼을 클릭하면 Window가 띄워지고 해당 UI에는 Timer 색상 설정을 위한 Button과 AlwaysOnTop을 위한 Switch, 마지막으로 투명도 설정을 위한 Slidebar가 있습니다.

It uses a ColorDialog, whose onAccepted handler is called when the ColorDialog's OK button is clicked. The signals are passed through the ConfigureDialog object to TimeTimerMgr, which then sets the properties in TimeTimer.qml.
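The forwarding chain (QML signal → ConfigureDialog slot → ConfigureDialog signal → TimeTimerMgr slot) can be pictured with a plain C++ analogy. This is not how Qt implements signals and slots: moc, connection types and thread affinity are all left out. Signal, Dialog and Manager are hypothetical names, and std::function callbacks stand in for connect().

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Minimal stand-in for a signal: connected callbacks run in order.
template <typename... Args>
class Signal {
public:
    void connect(std::function<void(Args...)> slot) {
        assert(slot);  // reject empty callbacks
        m_slots.push_back(std::move(slot));
    }
    void emitSignal(Args... args) const {
        for (const auto& slot : m_slots) slot(args...);
    }
private:
    std::vector<std::function<void(Args...)>> m_slots;
};

// Plays the role of SettingDialog.qml + ConfigureDialog
struct Dialog {
    Signal<double> changeOpacity;  // like emit_changeOpcaity in QML
    Signal<double> setOpacity;     // like ConfigureDialog::Emit_setOpacity

    Dialog() {
        // Like Slot_GetOpacity: only re-emits the value
        changeOpacity.connect([this](double v) { setOpacity.emitSignal(v); });
    }
    Dialog(const Dialog&) = delete;             // the lambda captures this
    Dialog& operator=(const Dialog&) = delete;
};

// Plays the role of TimeTimerMgr
struct Manager {
    double windowOpacity = 1.0;  // stands in for the window's dOpacity
    void attach(Dialog& d) {
        d.setOpacity.connect([this](double v) { windowOpacity = v; });
    }
};
```

Emitting changeOpacity on the dialog reaches the manager through the intermediate signal, just as the Slider's value ends up in the window's dOpacity property.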

```qml
import QtQuick 2.15
import QtQuick.Window 2.15
import QtQuick.Controls 2.15
import QtQuick.Layouts 1.15
import QtQuick.Dialogs 1.3

Window {
    id: settingDlg

    property int winWidth: 200
    property int winHeight: 240
    property color backColor: "black"
    property double dOpacity: 1
    property bool bAlwaysOnTop: false

    width: winWidth
    height: winHeight
    minimumWidth: winWidth
    minimumHeight: winHeight
    maximumWidth: winWidth
    maximumHeight: winHeight

    // Connected to ConfigureDialog's slots from C++
    signal emit_changeColor(color back)
    signal emit_changeOpcaity(double dOpacity)
    signal emit_changeAlwaysOnTop(bool bChecked)

    GridLayout {
        anchors.fill: parent
        anchors.centerIn: parent
        columnSpacing: 0
        columns: 2
        Layout.alignment: Qt.AlignCenter

        property int nWidth: 50
        property int nHeight: 30

        Label {
            Layout.preferredWidth: parent.nWidth
            Layout.preferredHeight: parent.nHeight
            Layout.alignment: Qt.AlignVCenter | Qt.AlignRight
            text: "타이머 색상"   // "Timer color"
            verticalAlignment: Text.AlignVCenter
            horizontalAlignment: Text.AlignHCenter
        }

        // Shows the current color; opens the ColorDialog when clicked
        Button {
            id: colorBtn
            Layout.preferredWidth: parent.nWidth
            Layout.preferredHeight: parent.nHeight
            Layout.alignment: Qt.AlignCenter
            background: Rectangle {
                color: backColor
            }
            onClicked: {
                colorDialog.open()
            }
        }

        Label {
            text: "상단 고정"   // "Always on top"
            Layout.preferredWidth: parent.nWidth
            Layout.preferredHeight: parent.nHeight
            Layout.alignment: Qt.AlignVCenter | Qt.AlignRight
            verticalAlignment: Text.AlignVCenter
            horizontalAlignment: Text.AlignHCenter
        }

        Switch {
            Layout.preferredWidth: parent.nWidth
            Layout.preferredHeight: parent.nHeight
            Layout.alignment: Qt.AlignCenter
            checked: bAlwaysOnTop
            onCheckedChanged: {
                settingDlg.emit_changeAlwaysOnTop(checked)
            }
        }

        Label {
            text: "투명도"   // "Opacity"
            Layout.preferredWidth: parent.nWidth
            Layout.preferredHeight: parent.nHeight
            Layout.alignment: Qt.AlignVCenter | Qt.AlignRight
            verticalAlignment: Text.AlignVCenter
            horizontalAlignment: Text.AlignHCenter
        }

        // from 1 to 0: dragging to the right makes the window more transparent
        Slider {
            from: 1
            to: 0
            stepSize: 0.1
            value: dOpacity
            Layout.preferredWidth: parent.nWidth + 20
            Layout.preferredHeight: parent.nHeight
            Layout.alignment: Qt.AlignCenter
            onValueChanged: {
                console.log(value)
                settingDlg.emit_changeOpcaity(value)
            }
        }
    }

    ColorDialog {
        id: colorDialog
        modality: Qt.WindowModal
        title: "Timer Color"
        color: backColor
        // Called when the dialog's OK button is clicked
        onAccepted: {
            backColor = colorDialog.color
            settingDlg.emit_changeColor(backColor)
        }
    }
}
```

This is the ConfigureDialog object that opens the dialog. It keeps a QQuickWindow as a member.

```cpp
#ifndef CONFIGUREDIALOG_H
#define CONFIGUREDIALOG_H

#include <QObject>
#include <QColor>
#include <QQuickWindow>
#include <QQmlApplicationEngine>

// Current settings handed from TimeTimerMgr to the dialog
struct TimerSetting {
    QColor color;
    double opacity;
    bool bAlwaysOnTop;

    TimerSetting() {
        color.setRgb(0, 0, 0);
        opacity = 0;
        bAlwaysOnTop = false;
    }
};

class ConfigureDialog : public QObject
{
    Q_OBJECT
public:
    explicit ConfigureDialog(QObject *parent = nullptr, QQmlApplicationEngine* engine = NULL);
    ~ConfigureDialog();

    void Show();
    void SetSettingValue(TimerSetting& setValue);

signals:
    void Emit_setColor(QColor color);
    void Emit_setOpacity(double dOpacity);
    void Emit_setAlwaysOnTop(bool bChecked);
    void Emit_Close();   // TimeTimerMgr connects this to Slot_DialogClose

public slots:
    void Slot_GetColor(QColor color);
    void Slot_GetOpacity(double dOpacity);
    void Slot_GetAlwaysOnTop(bool bChecked);

private:
    QQuickWindow* m_pView;
    QQmlApplicationEngine* m_pEngine;
};

#endif // CONFIGUREDIALOG_H
```

This is ConfigureDialog's cpp file. To show another qml file as its own window, it creates a QQmlComponent, takes the created object as a QQuickWindow, and shows it.

```cpp
#include "ConfigureDialog.h"
#include <QQmlComponent>
#include <QQmlContext>

ConfigureDialog::ConfigureDialog(QObject *parent, QQmlApplicationEngine *engine)
    : QObject{parent}
    , m_pView(NULL)
    , m_pEngine(engine)
{
}

void ConfigureDialog::Show()
{
    if ( m_pEngine == NULL ) { return; }

    // Create the window from SettingDialog.qml on the first call only;
    // it is owned by this object and deleted in the destructor
    if ( m_pView == NULL ) {
        QQmlComponent comp(m_pEngine, QUrl(QStringLiteral("qrc:/SettingDialog.qml")));
        m_pView = qobject_cast<QQuickWindow*>(comp.create(m_pEngine->rootContext()));
        if ( m_pView == NULL ) { return; }

        connect(m_pView, SIGNAL(emit_changeColor(QColor)), this, SLOT(Slot_GetColor(QColor)), Qt::UniqueConnection);
        connect(m_pView, SIGNAL(emit_changeOpcaity(double)), this, SLOT(Slot_GetOpacity(double)), Qt::UniqueConnection);
        connect(m_pView, SIGNAL(emit_changeAlwaysOnTop(bool)), this, SLOT(Slot_GetAlwaysOnTop(bool)), Qt::UniqueConnection);

        m_pEngine->rootContext()->setContextProperty("contorlDlg", this);
    }

    m_pView->show();
}

void ConfigureDialog::SetSettingValue(TimerSetting &setValue)
{
    if ( m_pView == NULL ) { return; }

    // Push the current values into SettingDialog.qml's properties
    m_pView->setProperty("backColor", setValue.color);
    m_pView->setProperty("dOpacity", setValue.opacity);
    m_pView->setProperty("bAlwaysOnTop", setValue.bAlwaysOnTop);
}

// The slots below relay the QML signals as C++ signals to TimeTimerMgr
void ConfigureDialog::Slot_GetColor(QColor color)
{
    emit Emit_setColor(color);
}

void ConfigureDialog::Slot_GetOpacity(double dOpacity)
{
    emit Emit_setOpacity(dOpacity);
}

void ConfigureDialog::Slot_GetAlwaysOnTop(bool bChecked)
{
    emit Emit_setAlwaysOnTop(bChecked);
}

ConfigureDialog::~ConfigureDialog()
{
    if ( m_pView != NULL ) {
        delete m_pView;
        m_pView = NULL;
    }
}
```

Changing the color value changes the color of the clock right away.

The full code is available at the GitHub link below.

GitHub - psy1064/TimeTimer_Qt: TimeTimer Use Qt/QML


The post below, which adds more features, is also worth a look.

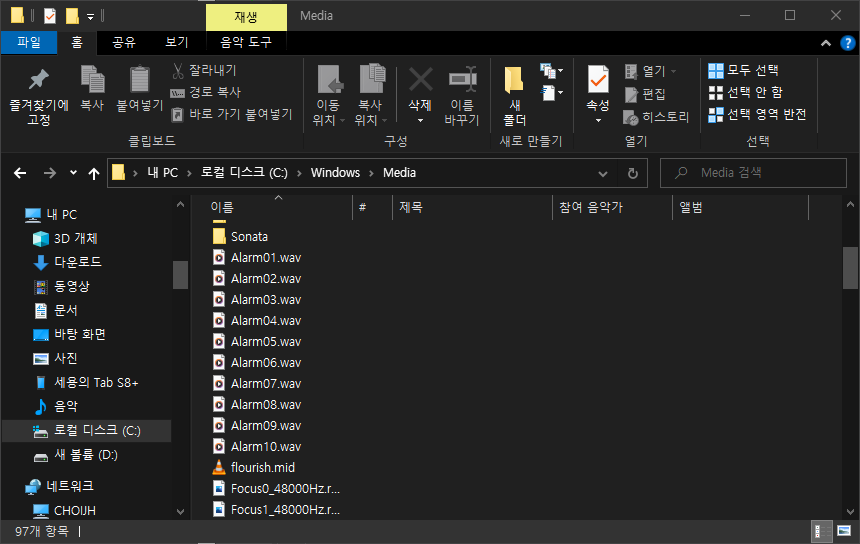

[Qt] QML(8) - Applying SoundEffect and a ComboBox ListModel
